Elasticsearch in Java projects – index and read documents

Nowadays the market demands projects with efficient capabilities for searching and analyzing large volumes of data. One answer to this is Elasticsearch, which can be easily integrated into Java projects. In this series of articles I would like to present how Elasticsearch can be used in practice by developing a simple demo project that uses Elasticsearch features step by step.

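This first article presents how to index and read documents using the Java High Level REST Client, the official client written and supported by Elastic. Note that since version 7.15 Elastic has deprecated it in favour of the new Java API Client; it is, however, still available in the 7.x line used here.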

What is Elasticsearch?

Elasticsearch is an open-source search engine developed in Java and built on top of Apache Lucene, a high-performance full-text search engine library. It allows users to store, search and analyze data efficiently and returns responses in JSON format in near real time. Over the years, mainly due to its performance, Elasticsearch has become one of the most popular search engines. It is used not only by big companies like Wikipedia, Netflix or developers’ favourite Stack Overflow, but also by small startups.

Elasticsearch stores data as JSON objects called documents, which are grouped into indices. An index is a collection of documents with a similar structure. Each document is identified by its unique ID, whereas an index is identified by its name.

Prerequisites

- Java 8 or higher

- Installed and running Elasticsearch (refer to the official user guide)

Project setup

About

The idea is to develop a simple backend-for-frontend service that provides data about Formula 1 drivers. The service exposes a REST API through which the indexed data can be either refreshed or retrieved. To begin with, the Driver objects shown below will be indexed and retrieved.

```java
import java.time.LocalDate;

class Driver {

    String driverId;
    String code;
    String givenName;
    String familyName;
    LocalDate dateOfBirth;
    String nationality;
    boolean active;
    Integer permanentNumber;

    // getters and setters omitted for brevity
}
```

Maven configuration

Once Elasticsearch is running, the dependencies shown below need to be added to pom.xml. For this project, version 7.15.2 was chosen.

```xml
<properties>
    <elasticsearch.version>7.15.2</elasticsearch.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.elasticsearch.client</groupId>
        <artifactId>elasticsearch-rest-high-level-client</artifactId>
        <version>${elasticsearch.version}</version>
    </dependency>
    <dependency>
        <groupId>org.elasticsearch</groupId>
        <artifactId>elasticsearch</artifactId>
        <version>${elasticsearch.version}</version>
    </dependency>
</dependencies>
```

Project properties

For convenience, some Elasticsearch- and project-related properties were added to application.yml. Among other things, they declare that the documents will be stored in the db-drivers index.

```yaml
elasticsearch:
  host: localhost
  port: 9200

index:
  name:
    drivers: "db-drivers"
```

Java API

There are many ways to talk to Elasticsearch. Besides the REST API itself, Elastic provides official clients for many programming languages; for Java, there is the High Level REST Client.

High Level REST Client

The High Level REST Client accepts request objects and returns response objects for the most important APIs, such as info, get, index, delete, update, bulk and search. RestHighLevelClient is built on top of the low-level REST client. The following code shows how to create a RestHighLevelClient from a low-level RestClientBuilder in a Spring application. Every API of the High Level REST Client can be called either synchronously or asynchronously. This article focuses on synchronous calls, which return a response object or throw an exception on failure (for example an IOException when the request cannot be sent or the response cannot be parsed).

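```java
import org.apache.http.HttpHost;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestClientBuilder;
import org.elasticsearch.client.RestHighLevelClient;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ElasticsearchConfiguration {

    @Value("${elasticsearch.host}")
    private String elasticsearchHost;

    @Value("${elasticsearch.port}")
    private int elasticsearchPort;

    // The high-level client wraps a low-level RestClient built for the configured host and port.
    // Spring calls its public close() method on shutdown (inferred destroy method).
    @Bean
    RestHighLevelClient restHighLevelClient() {
        RestClientBuilder restClientBuilder = RestClient.builder(new HttpHost(elasticsearchHost, elasticsearchPort));
        return new RestHighLevelClient(restClientBuilder);
    }
}
```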

Indexing documents

Depending on the project requirements, documents can be stored using different approaches. On the one hand, a single index can be created once at the beginning, and documents are then added or updated whenever they change. This is useful when only part of the data is ingested at a time. On the other hand, when the full data set is always ingested, a new index can be created each time, which also provides some auditing possibilities. Each approach has its advantages and drawbacks and, as mentioned before, the choice is driven by the project requirements.

The demo application uses the second approach. Each time, a new index is created with its name suffixed by a timestamp, for example db-drivers-20211222-122343.
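A minimal sketch of how such a timestamped name could be built with java.time, assuming the suffix pattern yyyyMMdd-HHmmss inferred from the example name. The class IndexNames and its methods are hypothetical, not taken from the demo project. Elasticsearch index names must be lowercase, which this pattern satisfies.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper: builds index names such as db-drivers-20211222-122343.
final class IndexNames {

    // Assumed pattern, reproducing the suffix of the example name
    private static final DateTimeFormatter SUFFIX_FORMAT = DateTimeFormatter.ofPattern("yyyyMMdd-HHmmss");

    private IndexNames() {
    }

    // Appends the formatted timestamp to the base name, separated by a hyphen
    static String timestamped(String baseName, LocalDateTime timestamp) {
        return baseName + "-" + timestamp.format(SUFFIX_FORMAT);
    }

    // Uses the current time in the JVM's default time zone
    static String timestampedNow(String baseName) {
        return timestamped(baseName, LocalDateTime.now());
    }
}
```

Because timestampedNow relies on the default time zone, passing LocalDateTime.now(ZoneOffset.UTC) to timestamped(...) instead would keep the names independent of where the service runs.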

The process of indexing documents is divided into three steps:

- Create index

Creating a new index only requires building a CreateIndexRequest object, optionally with additional configuration such as index settings. The prepared request is then passed to the client's create method. The example below sets the number of shards and replicas.

In short, a shard is a Lucene index that contains a subset of the documents stored in an Elasticsearch index. The right number of shards depends on many factors, such as the amount of data and the kind of queries. There are two types of shards: primary shards and replicas, which are copies of the primaries. A replica is never allocated on the same node as its primary, which keeps the data available if a node fails. Since Elasticsearch 7.0, an index has one primary shard and one replica by default.

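```java
import static org.elasticsearch.client.RequestOptions.DEFAULT;

import org.elasticsearch.client.indices.CreateIndexRequest;
import org.elasticsearch.common.settings.Settings;

// client is the RestHighLevelClient bean; NO_OF_SHARDS and NO_OF_REPLICAS are project constants
CreateIndexRequest createIndexRequest = new CreateIndexRequest(indexName)
        .settings(Settings.builder()
                .put("index.number_of_shards", NO_OF_SHARDS)
                .put("index.number_of_replicas", NO_OF_REPLICAS)
                .build());

client.indices().create(createIndexRequest, DEFAULT);
```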
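The shard and replica settings translate into a simple count of shard copies. The hypothetical helper below sketches that arithmetic together with the rule that the copies of one shard must sit on different nodes; it deliberately ignores other allocation rules (disk watermarks, allocation awareness, filtering) that a real cluster also applies.

```java
// Hypothetical helper illustrating shard arithmetic; not part of any Elasticsearch API.
final class ShardMath {

    private ShardMath() {
    }

    // Each primary has `replicas` copies, so the index holds primaries * (1 + replicas) shard copies.
    static int totalShardCopies(int primaries, int replicas) {
        return primaries * (1 + replicas);
    }

    // Copies of the same shard must live on different nodes, so each shard can have
    // at most (nodes - 1) assigned replicas; the remaining replicas stay unassigned.
    static int unassignedReplicas(int primaries, int replicas, int nodes) {
        int assignablePerShard = Math.max(0, Math.min(replicas, nodes - 1));
        return primaries * (replicas - assignablePerShard);
    }
}
```

For example, with the default of one primary and one replica on a single local node, unassignedReplicas(1, 1, 1) returns 1: the replica cannot be allocated, so the index health is yellow, which is expected in a local development setup.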

- Index documents

Once the index exists, the documents can be stored. For each data object an IndexRequest is created with the given index name, and the object's data is passed to it as a map. To store everything in a single call to Elasticsearch, a BulkRequest is used: first each IndexRequest is added to the BulkRequest instance, and once the bulk request is prepared, the client.bulk() method is invoked. The WAIT_UNTIL refresh policy makes the call return only after the indexed documents are visible to search.

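```java
import static org.elasticsearch.action.support.WriteRequest.RefreshPolicy.WAIT_UNTIL;
import static org.elasticsearch.client.RequestOptions.DEFAULT;

import com.fasterxml.jackson.core.type.TypeReference;
import java.io.IOException;
import java.util.Map;
import org.elasticsearch.action.bulk.BulkRequest;
import org.elasticsearch.action.bulk.BulkResponse;
import org.elasticsearch.action.index.IndexRequest;

try {
    // WAIT_UNTIL: the call returns once the new documents are visible to search
    BulkRequest bulkRequest = new BulkRequest().setRefreshPolicy(WAIT_UNTIL);
    data.forEach(it -> {
        // Convert an instance of the data class to a map, which IndexRequest accepts as a source.
        // The explicit type argument keeps the anonymous TypeReference compatible with Java 8.
        Map<String, Object> source = objectMapper.convertValue(it, new TypeReference<Map<String, Object>>() {
        });
        // No document ID is set, so Elasticsearch generates one
        bulkRequest.add(new IndexRequest(indexName).source(source));
    });
    BulkResponse bulkResponse = client.bulk(bulkRequest, DEFAULT);
    // A bulk call can fail partially, so the per-item results must be checked
    if (bulkResponse.hasFailures()) {
        throw new ElasticsearchStoreException(bulkResponse.buildFailureMessage());
    }
} catch (IOException e) {
    throw new ElasticsearchStoreException(e);
}
```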
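Under the hood, a bulk request is sent to the _bulk endpoint as newline-delimited JSON: an action line followed by a source line for each document, with a trailing newline at the end. The sketch below builds roughly such a body for index operations by hand, assuming each document is already serialized to single-line JSON. The class BulkBody is hypothetical and only illustrates the format; the exact bytes the client sends may differ.

```java
import java.util.List;

// Hypothetical sketch of an NDJSON body for POST /_bulk containing only index operations.
final class BulkBody {

    private BulkBody() {
    }

    static String indexActions(String indexName, List<String> jsonDocuments) {
        StringBuilder body = new StringBuilder();
        for (String document : jsonDocuments) {
            // Every source must fit on one line, because newlines separate the entries
            if (document.indexOf('\n') >= 0) {
                throw new IllegalArgumentException("documents must be single-line JSON");
            }
            // Action/metadata line: index the following document into indexName (no _id, so one is generated)
            body.append("{\"index\":{\"_index\":\"").append(indexName).append("\"}}\n");
            // Source line: the document itself, also terminated by a newline
            body.append(document).append('\n');
        }
        return body.toString();
    }
}
```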

- Assign alias

An alias is a secondary name that can refer to one or more indices. Clients always use the alias, regardless of the real name of the index behind it. Switching to a new index is therefore done by rolling the alias over from the indices it is currently assigned to onto the new one. First, all indices currently under the alias are found using a GetAliasesRequest. Next, the alias is removed from all of them. Finally, the alias is assigned to the new index. Each of these operations is defined as an AliasActions object that is added to an IndicesAliasesRequest. At the end, the updateAliases() method of the client is invoked with the prepared request. Elasticsearch applies all actions of such a request atomically, so clients see the alias pointing either to the old indices or to the new one, never to neither or both.

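```java
import static org.elasticsearch.action.admin.indices.alias.IndicesAliasesRequest.AliasActions.Type.ADD;
import static org.elasticsearch.action.admin.indices.alias.IndicesAliasesRequest.AliasActions.Type.REMOVE;
import static org.elasticsearch.client.RequestOptions.DEFAULT;

import org.elasticsearch.action.admin.indices.alias.IndicesAliasesRequest;
import org.elasticsearch.action.admin.indices.alias.IndicesAliasesRequest.AliasActions;
import org.elasticsearch.action.admin.indices.alias.get.GetAliasesRequest;

IndicesAliasesRequest indicesAliasesRequest = new IndicesAliasesRequest();

// find all existing indices under the specified alias (toArray(new String[0]) also works on Java 8)
String[] assignedIndicesUnderAlias = client.indices().getAlias(new GetAliasesRequest(indexNameAsAlias), DEFAULT)
        .getAliases().keySet().toArray(new String[0]);

// unassign all previously found indices
if (assignedIndicesUnderAlias.length > 0) {
    AliasActions unassignAction = new AliasActions(REMOVE).indices(assignedIndicesUnderAlias).alias(indexNameAsAlias);
    indicesAliasesRequest.addAliasAction(unassignAction);
}

// assign the newly created index to the specified alias
AliasActions assignAction = new AliasActions(ADD).index(indexName).alias(indexNameAsAlias);
indicesAliasesRequest.addAliasAction(assignAction);

// invoke the client command; all actions are applied in one atomic update
client.indices().updateAliases(indicesAliasesRequest, DEFAULT);
```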
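The same alias swap can be expressed as a single request to the _aliases REST endpoint. This sketch builds its JSON body by hand for illustration; the class AliasSwap and its method are hypothetical, and the code assumes the alias and index names contain no characters that need JSON escaping.

```java
import java.util.List;

// Hypothetical sketch of the body for POST /_aliases that moves an alias to a new index.
final class AliasSwap {

    private AliasSwap() {
    }

    static String body(String alias, List<String> currentIndices, String newIndex) {
        StringBuilder actions = new StringBuilder();
        // Remove the alias from every index it currently points to (skipped on the first run)
        if (!currentIndices.isEmpty()) {
            actions.append("{\"remove\":{\"indices\":[\"")
                    .append(String.join("\",\"", currentIndices))
                    .append("\"],\"alias\":\"").append(alias).append("\"}},");
        }
        // Point the alias at the newly created index
        actions.append("{\"add\":{\"index\":\"").append(newIndex)
                .append("\",\"alias\":\"").append(alias).append("\"}}");
        return "{\"actions\":[" + actions + "]}";
    }
}
```

Both actions travel in one request, which is what makes the switch atomic; the old indices themselves are kept, preserving the auditing possibility mentioned earlier.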

The next section retrieves the documents from the index to verify the result.

Reading documents

Now that all documents are in the index, it is time to read them back. First, a SearchSourceBuilder is created; here it is important to increase the size parameter, because its default value is 10, so only the first 10 hits would be returned. Next, the source builder is passed to a SearchRequest together with the name of the source index, which defines what is searched for and in which index.

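```java
import java.io.IOException;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.search.builder.SearchSourceBuilder;

SearchResponse searchResponse;
try {
    // Prepare the search request; without an explicit query it matches all documents
    SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
    searchSourceBuilder.size(1000); // by default a query returns only 10 hits

    SearchRequest searchRequest = new SearchRequest();
    searchRequest.indices(indexName);
    searchRequest.source(searchSourceBuilder);

    // invoke the client command to get the search response
    searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
} catch (IOException e) {
    throw new ElasticsearchReadException(e);
}
```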
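A size of 1000 works as long as the index holds at most 1000 documents. For larger result sets, from and size can be combined to page through the hits (SearchSourceBuilder has from(int) and size(int) setters), but by default from + size may not exceed the index.max_result_window setting of 10,000. The hypothetical helper below computes the from/size pairs for a given number of hits and rejects pages beyond that window, where search_after or the scroll API would be needed instead.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: computes {from, size} pairs to page through totalHits documents.
final class SearchPaging {

    // Default value of the index.max_result_window setting
    static final int DEFAULT_MAX_RESULT_WINDOW = 10_000;

    private SearchPaging() {
    }

    static List<int[]> pages(long totalHits, int pageSize, int maxResultWindow) {
        if (pageSize <= 0) {
            throw new IllegalArgumentException("pageSize must be positive");
        }
        List<int[]> pages = new ArrayList<>();
        for (long from = 0; from < totalHits; from += pageSize) {
            // The last page may be shorter than pageSize
            int size = (int) Math.min(pageSize, totalHits - from);
            // A plain search rejects requests where from + size exceeds the window
            if (from + size > maxResultWindow) {
                throw new IllegalArgumentException("from + size exceeds max_result_window; use search_after or scroll");
            }
            pages.add(new int[] {(int) from, size});
        }
        return pages;
    }
}
```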

Finally, the hits of the search response returned by the client's search() method are parsed into output objects.

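```java
import static java.util.Arrays.stream;
import static java.util.stream.Collectors.toList;

import java.util.List;

// toOutputDocument is the project's own helper (not shown) that maps each SearchHit to an instance of T
List<T> fetchedList = stream(searchResponse.getHits().getHits())
        .map(it -> toOutputDocument(it, typeReference))
        .collect(toList());
```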

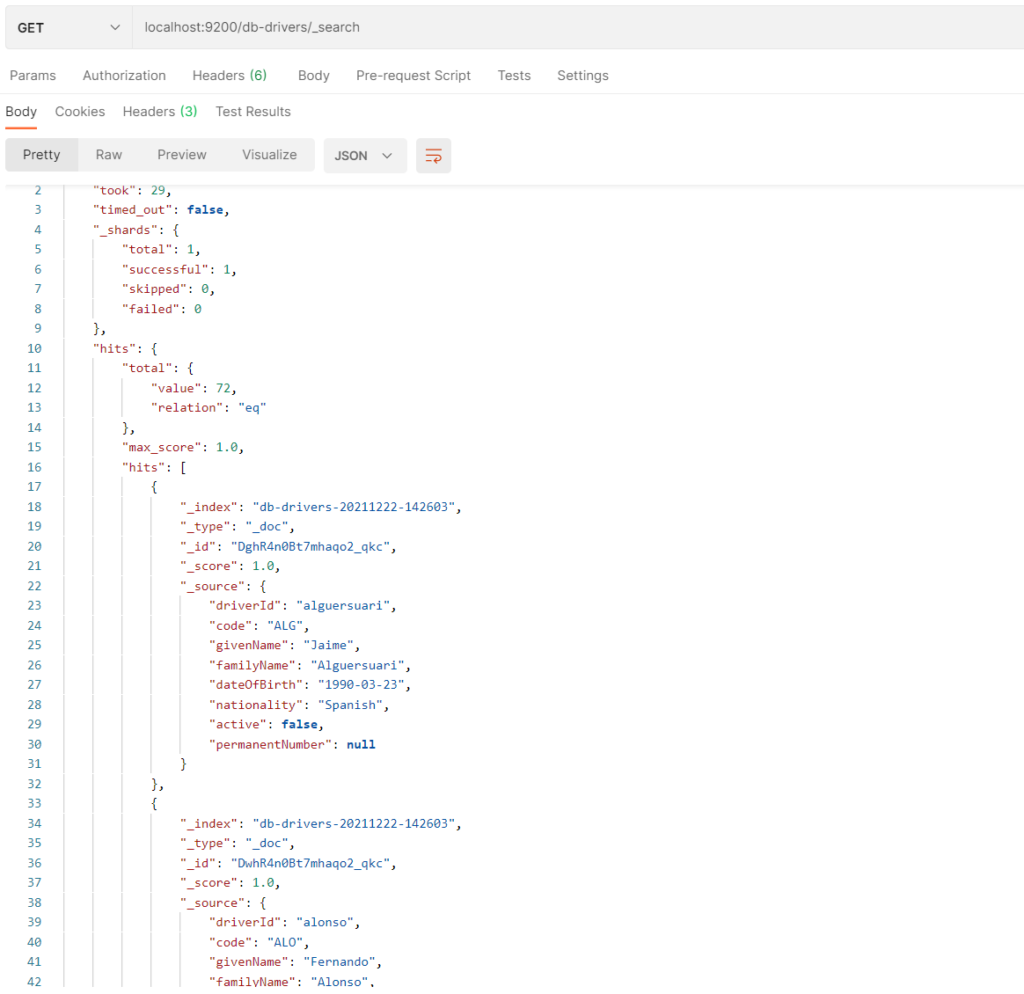

By using the REST API /db-drivers/_search the result shown below can be achieved.

Elasticsearch in Java – Summary

As shown, integrating Elasticsearch into a project is pretty straightforward. It requires only a small amount of configuration, and with the High Level REST Client, indexing and retrieving documents is not troublesome either.
