Bottlenecks identification with perf

This article presents how to easily profile a Linux system application with the perf tool. Additionally, you will learn how to visualize gathered data as interactive graphic.

Why should we always try to improve application performance?

An Answer to this question might seem obvious, but people tend to ignore real consequences of poorly written application in terms of performance. Especially in a business environment, wasted cycles on some common operations can cause a huge slowdown. We should always remember that hardware resources are not infinite and such mistakes always increase the cost of application running at desired efficiency. For example when it is some backend server, you would need to buy more machines or rent more resources from a cloud platform. On the other hand we have mobile and desktop apps where a weak performance lowers user experience and makes your program less competitive on the market.

How do performance issues arise?

Developers regularly add new lines of code to the project they are currently working on, but sometimes it is hard to predict how much they can impact application performance. Things like unnecessary iterations, object copies or database access can slide through review and increase the amount of time needed for requested operations. The Rule of thumb would be to measure how the new code changes impact time. This sounds rather easy when the application is tiny, or you just have to check one change. I usually compare elapsed times of a few test scenarios and add some timers if needed. If you are working on a major commercial project you probably have proper performance tests in releasing process so a brief check before pushing the change to repository should be fine. I will get back to evaluating a new code later on, but now let’s talk about a different scenario. You develop a relatively new, but already large application which maybe grew on some prototype or just adding new features had the highest priority so far and nobody cared too much about how well it performs. After some time it is pointed out that it got really slow with all those new things added and something needs to be done about that. Let’s also assume that there were no proper performance tests, or they were recently added to the project as the focus so far was on bringing new features. In a later part of this article I will try to show how to profile an old code which can have tens of modules and thousands of functions to find potential places for optimizations in Linux system applications.

Perf Linux profiler

To identify such places we will use the perf Linux profiler. To put it simply this tool allows us to periodically look up what our application is currently doing and save this result to a file. There are many options and minor tools associated with perf so I will not to delve into them and just go with simple example, to give you an idea what information you can get from such analysis. Firstly we need something to analyze, so I wrote a very simple program in C++.

void increment_to_3mln()

{

for(auto i = 0; i < 3000000; ++i)

++i;

}

void increment_to_10mln()

{

for(auto i = 0; i < 10000000; ++i)

++i;

}

int main()

{

for(auto i = 0; i < 10000; ++i)

{

increment_to_3mln();

increment_to_10mln();

}

}

It only has two functions, one increments the variable to 3 million and the other to 10 million. Now let’s run perf and attach it to this program process. We can do this by calling

perf record -F 99 -g -p <pid>

This is going to record call stacks from all threads at a given sampling. Let’s go through the options I have passed to the perf record call.

- -F 99, this tells

perfto record 99 samples per second, - -g, enables call-graph (stack chain/backtrace) recording, we will talk more about this while presenting results of our analysis,

- -p \, specifies the process identifier

If the command is successful it should dump the binary perf.data file. Now the simplest way to get some human-readable data is to call perf report which gives us the following output

Samples: 4K of event 'cycles', Event count (approx.): 171643302607

Children Self Command Shared Object Symbol

+ 100.00% 0.00% perf_example.ou libc-2.17.so [.] __libc_start_main

+ 100.00% 0.00% perf_example.ou perf_example.out [.] main

+ 76.90% 76.74% perf_example.ou perf_example.out [.] increment_to_10mln

+ 23.10% 23.00% perf_example.ou perf_example.out [.] increment_to_3mln

0.26% 0.04% perf_example.ou [kernel.kallsyms] [k] apic_timer_interrupt

0.22% 0.00% perf_example.ou [kernel.kallsyms] [k] smp_apic_timer_interrupt

main function was present in 100% of samples and increment_to_10mln took a bit more than 3 times of what increment_to_3mln needed so that’s exactly what should be expected. The last two rows are some kernel events that you can filter out by adding the -e parameter with some arguments to perf record command. This way of presenting results may seem fine for such a simple program, but usually that is not the case. In order to have a better view of what our application does we are going to create a visualization of gathered data. To make it possible we added -g option to perf record command, so now we have all information about stack chain/backtraces . Now we will use an external set of Brendan D. Gregg scripts available at Flamegraph github to create an interactive graphic with all the data.

If we want to do that, we need to call

perf script > <file>

instead of perf report. This command interprets binary data from perf record and writes it to file in a readable, but in a bit different format than perf report does as it produces lines with successive call stacks and numbers of times they were registered. For next step we use stackcollapse-perf.pl script from the link given before.

./stackcollapse-perf.pl <perf_script_outfile> > <stackcollapse_outfile>

Now we grouped all similar call stacks under one record. One thing that is left to do is to generate an actual file.

./flamegraph.pl <stackcollapse_outfile> > <png>

Link to example interactive flamegraph svg

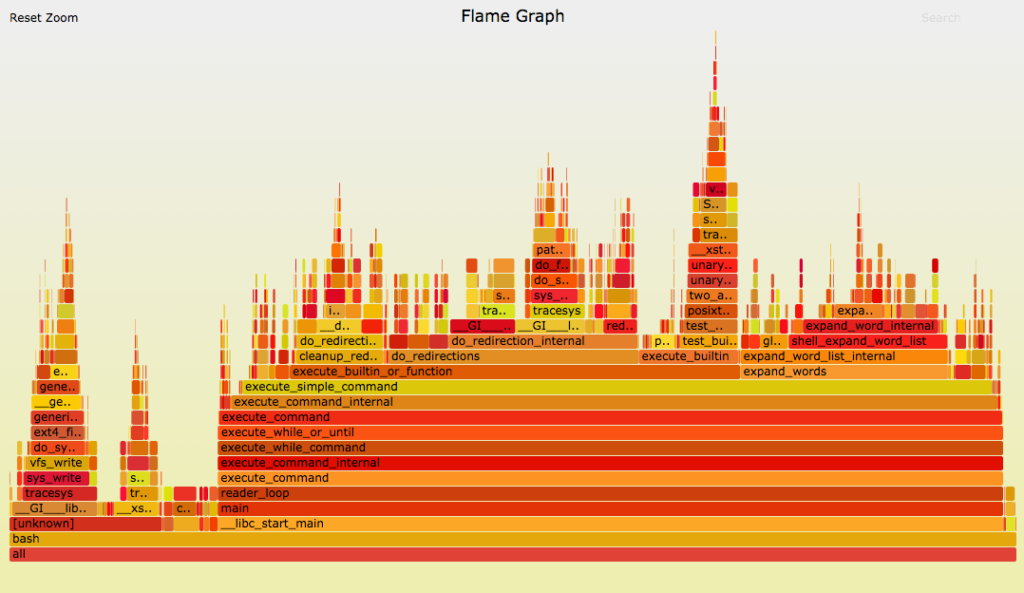

As you can check under the link above allows you to zoom into an interesting function, and has a search tool which additionally can sum up function calls from different call paths, so you can see the total time of a chosen function execution. My example is very simple, and it might not be worth going through all the trouble to get visualization but take a look at the one from the official flamegraph site.

When you have a complex application on your hands it is a lifesaving ability to have such a clear view on where time has actually been spent. With that you can identify old performance problems easily without a the need to add your own profiling to the code. It is also a good idea to incorporate graph generation to continuous integration, with that detection of performance drops can be done by comparing current results with previous ones.

Summary

Optimizing the application is rarely easy and identifying performance issues in the code without proper profiling can consume tons of effort. Perf helps you find places with potential performance issues and takes some work off your shoulders. Identifying these places is the first step, but a crucial one. The better you know the project you are working on, the easier you can confirm what and how can it be optimized.

References

Poznaj mageek of j‑labs i daj się zadziwić, jak może wyglądać praca z j‑People!

Skontaktuj się z nami