Python – setting up the environment

Introduction

Python is a multi-paradigm, general-purpose, interpreted, high-level language with dynamic typing and automatic memory management. Its design philosophy emphasizes code readability. Thanks to the ‘batteries included’ approach of its standard library, Python is a good fit for replacing shell scripts in more complex cases, where dependence on external tools makes portability an issue. Over the years it has proved itself in other areas as well, especially web applications, and has become the language of choice for many projects around the globe. Python does not have a steep learning curve and is relatively easy to get started with. This simplicity is deceptive, however, and often leads to bad designs.

In this article I will describe a few typical use cases, focusing on GNU/Linux systems.

Isolation levels

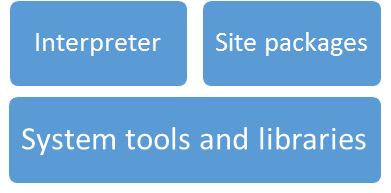

As Python gained popularity, every major Linux distribution started to ship with at least one version of the Python interpreter preinstalled. Some of them (RHEL, CentOS, Gentoo) even depend on Python for their internal tools. This means that setting up the most basic environment to start using Python does not require any action. What are the main components of such an environment?

The interpreter is one of the many Python interpreters available (CPython, Jython, PyPy), along with the core modules that form its standard library. Here I will focus on CPython, the reference implementation, which is written in C. By site packages I mean all the extra modules and packages (collections of modules) that were made available to the interpreter by any means. Both the interpreter and the site packages depend, one way or another, on various system tools and libraries. This dependency may be strict (the interpreter will not run, a module will not import) or feature-based (readline support, database backend availability).

On top of this sits the user code, whose complexity ranges from a hello-world one-liner to a full-fledged web application with a computationally intensive backend. It has its own set of requirements: it needs a set of packages installed, often requires specific versions, and is compatible with only a subset of the available Python interpreters. Yet it must coexist with other applications and tools (for instance on a developer’s machine) and must neither affect nor be affected by the operating system itself, which is especially important in a production environment. This creates the need to isolate the whole environment, or at least all the conflicting parts, from the system and other unrelated components.
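The components described above can be inspected from Python itself. The sketch below reports which interpreter implementation is running, where its site packages live, and whether a few feature-based modules can be imported. The function name and the default list of optional modules are illustrative assumptions; which modules matter depends on the application.

```python
import importlib
import platform
import sys
import sysconfig


def describe_environment(optional_modules=("readline", "sqlite3", "ssl")):
    """Return a dict describing the running interpreter and optional features."""
    features = {}
    for name in optional_modules:
        try:
            importlib.import_module(name)
            features[name] = True
        except ImportError:
            # Feature-based dependency: the interpreter still runs without it.
            features[name] = False
    return {
        "implementation": platform.python_implementation(),  # e.g. CPython, PyPy
        "version": platform.python_version(),
        "executable": sys.executable,
        "site_packages": sysconfig.get_paths()["purelib"],
        "features": features,
    }


if __name__ == "__main__":
    info = describe_environment()
    for key in ("implementation", "version", "executable", "site_packages"):
        print("%-15s %s" % (key, info[key]))
    for name, available in sorted(info["features"].items()):
        print("%-15s %s" % (name, "available" if available else "missing"))
```

Running the script with two different interpreters on the same machine is a quick way to see how much of the environment they actually share.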

Use-case: simple system script

Isolation level: none

We’ll start with an edge case where not having a separate environment is actually what you aim for. Imagine you are a system administrator on a YUM-based distribution like CentOS or RHEL and you want to list the 10 largest installed packages. As YUM is Python-based, there is a system-wide yum package with a well-defined API that you can use without any shell magic.

    # yum_app.py
    # Lists the 10 largest installed packages using the system-wide yum module.
    import yum

    base = yum.YumBase()
    base.setCacheDir()

    packages = base.rpmdb.returnPackages()
    packages.sort(key=lambda pkg: pkg.size, reverse=True)

    for position, package in zip(range(1, 11), packages):
        # Divide by a float so integer division does not truncate to whole MB.
        print("#%02d - %s\n %s, version %s [%5.1f MB]" % (
            position, package.summary, package.name, package.version,
            package.size / 1024.0**2
        ))

Use-case: additional Python packages

Isolation level: site-packages

Now imagine you have decided to enhance the previous script: allow the user to specify other conditions for package filtering, maybe add a search option and, of course, a web interface. While you can write a bare-bones web service using only the Python standard library, it is better to use a well-known framework and libraries that do the dirty work. You end up with dependencies that need to be installed somehow. There are a few bad ways to do that, including system-wide installation (if you have root access) or setting up PYTHONPATH. The proper way is to use virtualenv and pip to create isolated site packages and install all the dependencies there.

You can install virtualenv using whatever package manager your distribution uses. Chances are that it is already installed along with Python. If you plan to use Python 3 you can use python3 -m venv instead.

A virtual environment is simply a directory that contains new site packages and a copy of (or a link to) the Python interpreter specified upon its creation. It can inherit the system site packages (which is desirable in this example, since access to the yum package is necessary) or give a completely fresh start.
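To make the "just a directory" point concrete, here is a minimal sketch that creates an environment with the standard library venv module (Python 3 only; virtualenv is a separate tool) and reads back the settings stored in its pyvenv.cfg file. The function name and the temporary target directory are assumptions made for illustration.

```python
import os
import tempfile
import venv


def create_environment(path, inherit_system_packages=True):
    """Create a virtual environment at `path` and return its pyvenv.cfg settings."""
    builder = venv.EnvBuilder(
        system_site_packages=inherit_system_packages,
        with_pip=False,  # skip bootstrapping pip to keep the sketch fast
    )
    builder.create(path)

    settings = {}
    with open(os.path.join(path, "pyvenv.cfg")) as cfg:
        for line in cfg:
            key, _, value = line.partition("=")
            settings[key.strip()] = value.strip()
    return settings


if __name__ == "__main__":
    # Hypothetical location; any writable directory will do.
    target = os.path.join(tempfile.mkdtemp(), "demo-env")
    for key, value in sorted(create_environment(target).items()):
        print("%s = %s" % (key, value))
```

The include-system-site-packages entry in the output records whether the environment inherits the system site packages, which is the same choice the --system-site-packages option of virtualenv makes.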

Let’s convert the script to a poor man’s web app:

    # yum_app.py
    import yum
    from flask import Flask, Response


    class PackageManager(object):
        """Thin wrapper around the yum API."""

        def __init__(self):
            self.pkg = yum.YumBase()
            self.pkg.setCacheDir()

        def biggest(self, count):
            """Yield one formatted line for each of the `count` largest packages."""
            packages = self.pkg.rpmdb.returnPackages()
            packages.sort(key=lambda pkg: pkg.size, reverse=True)
            for position, package in zip(range(1, count + 1), packages):
                # Divide by a float so integer division does not truncate to whole MB.
                yield "%02d. %s %s [%.1f MB]" % (
                    position, package.name, package.version,
                    package.size / 1024.0**2
                )


    manager = PackageManager()
    app = Flask(__name__)


    @app.route('/biggest/<int:count>')
    def biggest(count):
        return Response("\n".join(manager.biggest(count)), content_type="text/plain")


    if __name__ == '__main__':
        app.run()

Now create a proper virtualenv in the my-yum-app directory, attached to the python2.7 interpreter:

    $ virtualenv --system-site-packages -p python2.7 my-yum-app
    $ source my-yum-app/bin/activate   # convenience step that sets up PATH
    (my-yum-app) $ pip install flask
    (my-yum-app) $ deactivate

Now it is possible to run the application from within the virtual environment simply with:

    $ my-yum-app/bin/python yum_app.py

Packages installed in the virtual environment take precedence over those installed system-wide.

Compare the module search paths of the system-wide Python and of the interpreter inside the virtual environment:

    $ python -c 'import sys; print("\n".join(sys.path))'
    $ my-yum-app/bin/python -c 'import sys; print("\n".join(sys.path))'

Hint: it may be a good idea to make the code under development installable (by providing a proper setup.py) at the earliest stage and to install it in the virtual environment with pip’s -e flag. This way it will always be importable by its package name, not only from the top-level directory (or with PYTHONPATH hacks).
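The search path comparison above can also be done from inside Python. The following sketch reports whether the running interpreter belongs to a virtual environment and then lists its module search path. The helper names are my own. The check relies on sys.base_prefix (set by venv and recent virtualenv) and sys.real_prefix (set by older virtualenv releases).

```python
import sys


def in_virtual_environment():
    """Return True when the running interpreter belongs to a virtual environment."""
    # venv (Python 3.3+) keeps the original installation in sys.base_prefix;
    # older virtualenv releases set sys.real_prefix instead.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    return hasattr(sys, "real_prefix") or base_prefix != sys.prefix


def search_path_report():
    """Return a text report: environment status, prefix, then sys.path entries."""
    status = "yes" if in_virtual_environment() else "no"
    lines = ["virtual environment: %s" % status, "prefix: %s" % sys.prefix]
    lines.extend(str(entry) for entry in sys.path)
    return "\n".join(lines)


if __name__ == "__main__":
    print(search_path_report())
```

Saving this as a file and running it once with the system interpreter and once with my-yum-app/bin/python should make the difference in prefixes and search paths visible side by side.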

Use-case: multiple development environments

Isolation level: python interpreter + site packages

Managing virtual environments becomes an important issue on developers’ machines. Several tools are designed to deal with it, ranging from simple shell wrappers like virtualenvwrapper and autoenv to whole distributions like Anaconda or ActivePython. Development environments like the PyCharm IDE often ship with a built-in virtual environment management utility.

One of the simplest yet most powerful solutions is pyenv. It has virtually no dependencies of its own, which makes it easy to bootstrap: to start, all one needs is a shell and network access. Later, a working compiler will be necessary to build the requested Python interpreters. pyenv works by creating small wrapper scripts (shims) for every executable that appears in any environment, including pip and the interpreter itself, and then running the proper executable based on the user’s choices.

Installation uses Git, but unpacking archives downloaded from GitHub works as well:

    $ git clone https://github.com/yyuu/pyenv.git ~/.pyenv
    $ git clone https://github.com/yyuu/pyenv-virtualenv.git \
          ~/.pyenv/plugins/pyenv-virtualenv
    $ export PYENV_ROOT="$HOME/.pyenv"
    $ export PATH="$PYENV_ROOT/bin:$PATH"
    $ eval "$(pyenv init -)"
    $ eval "$(pyenv virtualenv-init -)"

The last four commands can be placed in a shell initialization file. They are required to use some pyenv features, including interpreter installation, but are not necessary to use the environments directly.

Once installation is complete, one can display the list of interpreters available for installation:

    $ pyenv install -l

Currently the list includes over 250 interpreters in different versions, including CPython from 2.1.3 to 3.7-dev, anaconda, ironpython, jython, pypy, and stackless. Let’s create an environment for yum_app.py:

    $ pyenv install 3.5.2
    $ pyenv virtualenv 3.5.2 my-yum-app
    $ pyenv shell my-yum-app
    $ pip install Flask

The pyenv shell command sets the PYENV_VERSION variable to the name of the environment, which makes this particular virtual environment the default for the current shell. One can make such a choice globally for the current user with pyenv global. Especially useful is pyenv local, which creates a file named .python-version that sets the environment for the directory it is placed in and all its descendants.

In PyCharm, a pyenv-created environment can be used by specifying the full path to its interpreter. In this case it would be ~/.pyenv/versions/3.5.2/envs/my-yum-app/bin/python.
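The way the shell, local and global choices interact can be modelled in a few lines of Python. This is a simplified sketch of the lookup order, not pyenv’s actual implementation: the function name and its parameters are hypothetical, and details such as multiple versions in one file are ignored.

```python
import os


def resolve_version(start_dir, environ, global_version_file):
    """Return (version, source) following a simplified pyenv-like lookup order."""
    # 1. `pyenv shell` sets PYENV_VERSION for the current shell.
    if environ.get("PYENV_VERSION"):
        return environ["PYENV_VERSION"], "PYENV_VERSION"

    # 2. `pyenv local` writes .python-version; check start_dir and its parents.
    directory = os.path.abspath(start_dir)
    while True:
        candidate = os.path.join(directory, ".python-version")
        if os.path.isfile(candidate):
            with open(candidate) as handle:
                return handle.read().strip(), candidate
        parent = os.path.dirname(directory)
        if parent == directory:  # reached the filesystem root
            break
        directory = parent

    # 3. `pyenv global` stores the user's default in a single file.
    if os.path.isfile(global_version_file):
        with open(global_version_file) as handle:
            return handle.read().strip(), global_version_file

    # 4. Nothing selected: fall back to the system interpreter.
    return "system", "default"


if __name__ == "__main__":
    global_file = os.path.expanduser("~/.pyenv/version")
    print(resolve_version(os.getcwd(), os.environ, global_file))
```

The most specific setting wins: a value set for the current shell overrides a .python-version file, which in turn overrides the user-wide default.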

Use-case: isolated & relocatable environments

Isolation level: system tools and libraries + python interpreter + site packages

Many external packages have complex system dependencies, and setting up the environment is complicated by the need to satisfy them. In some cases these dependencies are internal to the team or company, or highly customized. Sometimes the required system libraries conflict with other parts of the system or lack specific compile-time features, which makes them hard to set up system-wide. It is also often desirable to have several copies of a completely reproducible environment without having to create it from scratch every time; standard Python virtual environments are not easily relocatable (that is, they usually cannot be moved to a different path and still work reliably). Dedicated environment variables (like proxies), mount points or system-wide configuration may also be part of the environment setup.

While there are several generic tools like Vagrant, probably the simplest way is to use Docker directly.
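One common reason why virtual environments do not survive a move is that the scripts in their bin directory start with a shebang line containing the absolute path of the environment’s interpreter. The sketch below lists such scripts. It is only an illustration, not part of virtualenv; the function name and the assumed layout (a bin directory under the environment root) are my own.

```python
import os


def scripts_bound_to_prefix(env_dir):
    """Return names of scripts in env_dir/bin whose shebang points into env_dir."""
    env_dir = os.path.abspath(env_dir)
    bin_dir = os.path.join(env_dir, "bin")
    bound = []
    for name in sorted(os.listdir(bin_dir)):
        path = os.path.join(bin_dir, name)
        if not os.path.isfile(path):
            continue
        with open(path, "rb") as handle:
            first_line = handle.readline()
        # A shebang such as "#!/home/user/env/bin/python" breaks once the
        # directory is moved, because the old absolute path no longer exists.
        if first_line.startswith(b"#!") and env_dir.encode() in first_line:
            bound.append(name)
    return bound


if __name__ == "__main__":
    import sys
    # Usage: python check_env.py path/to/environment
    for script in scripts_bound_to_prefix(sys.argv[1]):
        print(script)
```

Every script it prints would need its first line rewritten after moving the directory, which is part of what makes an image-based approach attractive.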

Make sure you have Docker installed and running. Start with the following simplistic Dockerfile:

    FROM python:3
    # RUN apt install … && pip install … && wget … && …
    WORKDIR /workdir

Build the image and create a new container running your code:

    $ docker build . -t environment
    $ docker run -it --rm -v "$PWD":/workdir environment python …

This way one gains:

- Complete environment with as many separate, exact copies as needed

- Free sandboxing to a directory of choice, full control over what to expose

- Possibility to easily bootstrap and link to all necessary services as well

- A way to create a development environment that matches the production

Wrap-up thoughts

As usual, your mileage may vary and, despite the Zen of Python, there is no single, generally accepted best way to set up the environment: using the system Python will do in many simple cases, while building a Docker image may be overkill and an unnecessary complication. The de facto standard is virtualenv, and it should be used in all but the simplest cases without external dependencies. Beyond that, managing multiple virtual environments and interpreters is usually quite a personal matter that depends on the habits and general attitude of the user. If your setup is complex and building the environment is not trivial, the right choice might be not to automate this process, but to try to simplify it.

Simple is better than complex.

Complex is better than complicated.

Tim Peters, The Zen of Python
