Resource dependencies and modularization in Terraform

If you haven’t had a chance to do it yet – have a look at my Introduction to Terraform article, where you will learn how to set up the Google Cloud project and configure Terraform on your local machine to be able to play with some resources.

Resource

Previously we used code similar to this one:

resource "google_storage_bucket" "default" {

name = "terraform-sandbox-302013-my-storage"

}

to instruct Terraform to create the Cloud Storage bucket.

As you can see, the resource definition is fairly simple: the resource keyword, followed by the resource type and a local name (which will become useful soon), then the resource attributes in curly braces. But what exactly happens when we run the terraform apply command? Terraform refreshes its state (its record of the resources it manages) against the real resources in our project and compares the result with the configuration written in the .tf files. If a resource is tracked in the state but no longer appears in the configuration, it will be destroyed. If a resource is present in the configuration but missing from the project, it will be created. There is also a third possibility: the resource exists both in the configuration and in the project, but with different attributes. In that case Terraform will either modify the real resource to match the configuration or destroy and recreate it (it is not always possible to amend an existing resource through the provider’s API). Note that resources which Terraform never created or imported are not in its state, so they are left untouched. Let’s see this behavior in practice.

We will modify the main.tf file slightly and add some labels to our bucket:

// Configure the Google Cloud provider

provider "google" {

project = "terraform-sandbox-302013"

region = "europe-west3"

}

//Create bucket

resource "google_storage_bucket" "default" {

name = "terraform-sandbox-302013-my-storage"

labels = {

my_key = "my_value",

my_key_2 = "other_value"

}

}

We can apply this configuration:

$ terraform apply -auto-approve

google_storage_bucket.default: Creating...

google_storage_bucket.default: Creation complete after 3s [id=terraform-sandbox-302013-my-storage]

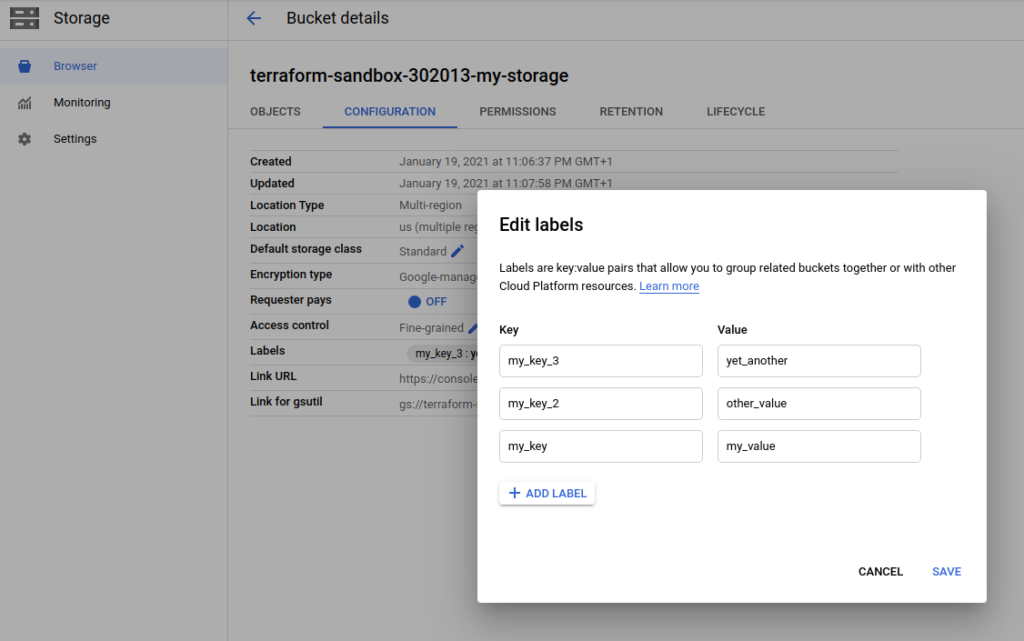

Using the web console we can confirm that the bucket was created with the labels in place (they can be found in the “Configuration” tab). Let’s now modify the labels in the web console by adding a third label, my_key_3, with the value yet_another.

Running the terraform plan command, we can see that Terraform detects our change and proposes to update the bucket so that it matches the configuration again:

$ terraform plan

google_storage_bucket.default: Refreshing state... [id=terraform-sandbox-302013-my-storage]

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

~ update in-place

Terraform will perform the following actions:

# google_storage_bucket.default will be updated in-place

~ resource "google_storage_bucket" "default" {

id = "terraform-sandbox-302013-my-storage"

~ labels = {

- "my_key_3" = "yet_another" -> null

# (2 unchanged elements hidden)

}

name = "terraform-sandbox-302013-my-storage"

# (10 unchanged attributes hidden)

}

Plan: 0 to add, 1 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform

can't guarantee that exactly these actions will be performed if

"terraform apply" is subsequently run.

We can also delete the bucket using the web console; running terraform apply will then recreate the resource. For the last test case, we will remove the whole resource definition from the main.tf file and run terraform plan followed by terraform apply:

$ terraform plan

google_storage_bucket.default: Refreshing state... [id=terraform-sandbox-302013-my-storage]

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

- destroy

Terraform will perform the following actions:

# google_storage_bucket.default will be destroyed

- resource "google_storage_bucket" "default" {

- bucket_policy_only = false -> null

- default_event_based_hold = false -> null

- force_destroy = false -> null

- id = "terraform-sandbox-302013-my-storage" -> null

- labels = {

- "my_key" = "my_value"

- "my_key_2" = "other_value"

} -> null

- location = "US" -> null

- name = "terraform-sandbox-302013-my-storage" -> null

- project = "terraform-sandbox-302013" -> null

- requester_pays = false -> null

- self_link = "https://www.googleapis.com/storage/v1/b/terraform-sandbox-302013-my-storage" -> null

- storage_class = "STANDARD" -> null

- uniform_bucket_level_access = false -> null

- url = "gs://terraform-sandbox-302013-my-storage" -> null

}

Plan: 0 to add, 0 to change, 1 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform

can't guarantee that exactly these actions will be performed if

"terraform apply" is subsequently run.

$ terraform apply -auto-approve

google_storage_bucket.default: Refreshing state... [id=terraform-sandbox-302013-my-storage]

google_storage_bucket.default: Destroying... [id=terraform-sandbox-302013-my-storage]

google_storage_bucket.default: Destruction complete after 2s
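The cases above can be condensed into a small sketch. It assumes the same bucket as before; the comments describe how Terraform is expected to react when each argument changes. That labels are updated in place is visible in the plan output above. That changing the name forces a replacement is an assumption stated here, based on the fact that an existing Cloud Storage bucket cannot be renamed.

```hcl
resource "google_storage_bucket" "default" {
  # A new name cannot be applied to an existing bucket, so the plan is
  # expected to show a destroy followed by a create (a replacement).
  name = "terraform-sandbox-302013-my-storage"

  # Labels can be changed through the API, so the plan shows
  # "~ update in-place", as in the output earlier in this section.
  labels = {
    my_key   = "my_value",
    my_key_2 = "other_value"
  }
}
```

In a plan, a replacement is marked with -/+ and an in-place update with ~, so it is worth reading the plan before applying it.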

Resource dependencies

Life isn’t always as simple as presented in the example above – resources may depend on other resources – especially on some computed attributes that aren’t known until the resource is provisioned. Consider the following example: we would like to create a bucket and after that bind two accounts with specific roles to it.

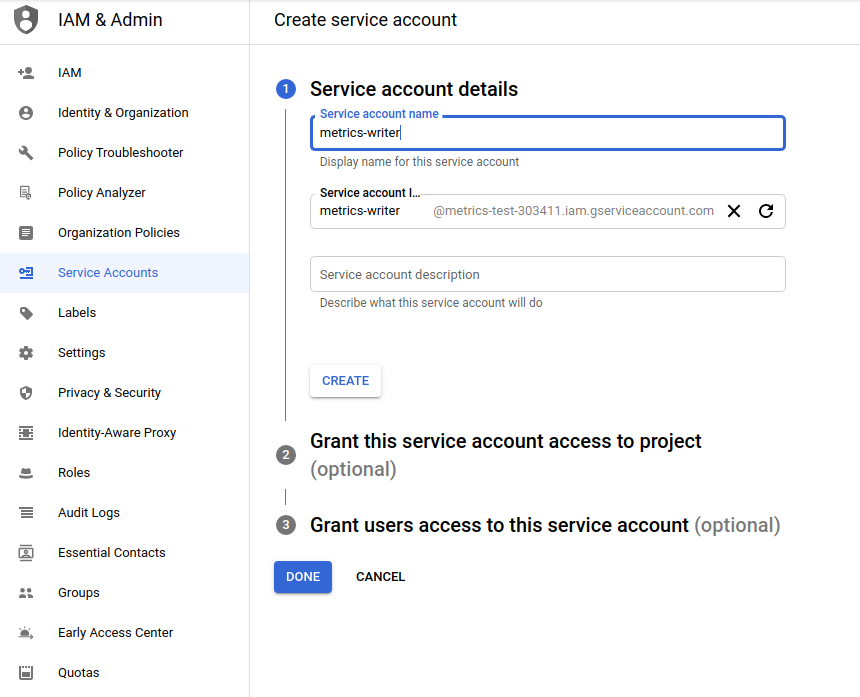

First, we will create two Service Accounts (in the same way as we did for the “terraform account”), but without assigning any roles.

Next, we modify the bucket resource slightly by adding the uniform_bucket_level_access attribute set to true. This step is not required, but uniform bucket-level access is generally recommended because it unifies and simplifies how you grant access to your Cloud Storage resources. Now we are ready to include the IAM bindings in our configuration:

// Configure the Google Cloud provider

provider "google" {

project = "terraform-sandbox-302013"

region = "europe-west3"

}

//Create bucket

resource "google_storage_bucket" "default" {

name = "terraform-sandbox-302013-my-storage"

labels = {

my_key = "my_value",

my_key_2 = "other_value"

}

uniform_bucket_level_access = true

}

// bind role to the bucket

resource "google_storage_bucket_iam_binding" "admin-binding" {

bucket = google_storage_bucket.default.name

role = "roles/storage.objectAdmin"

members = [

"serviceAccount:my-bucket-admin@terraform-sandbox-302013.iam.gserviceaccount.com",

]

}

resource "google_storage_bucket_iam_binding" "viewer-binding" {

bucket = google_storage_bucket.default.name

role = "roles/storage.objectViewer"

members = [

"serviceAccount:my-bucket-viewer@terraform-sandbox-302013.iam.gserviceaccount.com",

]

}

The whole idea of resource dependencies hides behind the statement:

bucket = google_storage_bucket.default.name

As you can see, we are not referring to the bucket by its name written in code as a string value. Instead, we use a resource attribute reference in the format resource_type.resource_name.attribute. This kills two birds with one stone: we avoid duplicating the attribute value and we “inform” Terraform that there is a dependency between those resources.
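Not every dependency can be expressed through an attribute reference. For such cases Terraform offers the depends_on meta-argument. The sketch below is not part of the configuration used in this article: the google_storage_bucket_object resource, its local name readme and the object content are assumptions made only for illustration. The bucket reference alone already orders the object after the bucket; depends_on additionally makes it wait for the admin binding, which it does not reference anywhere.

```hcl
# Hypothetical object, used only to illustrate an explicit dependency.
resource "google_storage_bucket_object" "readme" {
  name    = "README.txt"
  bucket  = google_storage_bucket.default.name # implicit dependency on the bucket
  content = "Managed by Terraform"

  # Explicit dependency: no attribute of the binding is referenced here,
  # so Terraform would not otherwise know about this ordering.
  depends_on = [
    google_storage_bucket_iam_binding.admin-binding,
  ]
}
```

Attribute references are usually the better choice; depends_on is best kept for dependencies that Terraform cannot infer on its own.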

We are now ready to apply our configuration:

$ terraform apply -auto-approve

google_storage_bucket.default: Creating...

google_storage_bucket.default: Creation complete after 2s [id=terraform-sandbox-302013-my-storage]

google_storage_bucket_iam_binding.viewer-binding: Creating...

google_storage_bucket_iam_binding.admin-binding: Creating...

google_storage_bucket_iam_binding.viewer-binding: Still creating... [10s elapsed]

google_storage_bucket_iam_binding.admin-binding: Still creating... [10s elapsed]

google_storage_bucket_iam_binding.viewer-binding: Creation complete after 12s [id=b/terraform-sandbox-302013-my-storage/roles/storage.objectViewer]

google_storage_bucket_iam_binding.admin-binding: Creation complete after 12s [id=b/terraform-sandbox-302013-my-storage/roles/storage.objectAdmin]

As you can see from the output, the bucket was created first. Once its creation completed, Terraform started creating both bindings in parallel: as there is no dependency between them, Terraform optimizes the creation process and saves us time.
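In this article the two Service Accounts were created by hand. As a sketch of how the same mechanism could chain further, the accounts could be managed by Terraform as well. The local names bucket_admin and admin_binding_sketch and the display name are assumptions for illustration; account_id and the exported email attribute belong to the google_service_account resource of the Google provider.

```hcl
# Hypothetical: manage the admin Service Account with Terraform too.
resource "google_service_account" "bucket_admin" {
  account_id   = "my-bucket-admin"
  display_name = "Bucket admin"
}

resource "google_storage_bucket_iam_binding" "admin_binding_sketch" {
  bucket = google_storage_bucket.default.name
  role   = "roles/storage.objectAdmin"

  # Referencing the computed email creates a second implicit dependency:
  # the binding now waits for both the bucket and the Service Account.
  members = [
    "serviceAccount:${google_service_account.bucket_admin.email}",
  ]
}
```

With this layout the bucket and the Service Account do not depend on each other, so Terraform is free to create them in parallel before it creates the binding. Such a binding would take the place of the admin-binding resource shown earlier rather than sit next to it, since both manage the same role on the same bucket.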

Modules

Our code works correctly but it doesn’t look like something reusable. To improve it we will use Terraform variables. The variable definition and usage are similar to what you know from other programming languages:

variable "variable_name" {

default = "example" # a default value, which makes the variable optional

type = string # specifies what value types are accepted for the variable

description = "An example input variable" # the input variable's documentation

validation { # a block defining validation rules, usually in addition to type constraints

condition = length(var.variable_name) > 0

error_message = "The value must not be empty."

}

sensitive = false # when true, limits Terraform UI output when the variable is used in the configuration

}

All variable arguments are optional. We will define all our variables in a new file, variables.tf, inside the same directory:

variable "project" {

type = string

description = "GCP project"

}

variable "region" {

type = string

description = "Region where resources will be provisioned"

}

variable "bucket_name" {

type = string

description = "Name of the bucket to be provisioned"

}

variable "bucket_labels" {

type = map(string)

description = "Labels to be assigned to the created bucket"

default = {}

}

variable "bucket_uniform_level_access" {

type = bool

description = "Enable Uniform bucket-level access"

default = true

}

variable "bucket_admins" {

type = list(string)

description = "List of identities to bind with storage/objectAdmin role"

}

variable "bucket_viewers" {

type = list(string)

description = "List of identities to bind with storage/objectViewer role"

}
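The type constraints above catch only the most basic mistakes. As a sketch, a validation block could reject obviously invalid bucket names before any API call is made; it would replace the bucket_name definition above. The regular expression is a simplified assumption about Cloud Storage naming rules (lowercase letters, digits, dashes, underscores and dots, 3 to 63 characters), not a complete check.

```hcl
variable "bucket_name" {
  type        = string
  description = "Name of the bucket to be provisioned"

  validation {
    # regex() raises an error when there is no match; can() turns that into false.
    condition     = can(regex("^[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]$", var.bucket_name))
    error_message = "The bucket name must be 3-63 characters of lowercase letters, digits, dashes, underscores or dots."
  }
}
```

A value that fails the condition stops terraform plan with the given error message, so the mistake surfaces before anything is created.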

We will also move the provider definition to a separate file called providers.tf:

// Configure the Google Cloud provider

provider "google" {

project = var.project

region = var.region

}

The main.tf file now contains only the resource definitions, with most of the hardcoded values replaced by variables:

//Create bucket

resource "google_storage_bucket" "default" {

name = var.bucket_name

labels = var.bucket_labels

uniform_bucket_level_access = var.bucket_uniform_level_access

}

// bind role to the bucket

resource "google_storage_bucket_iam_binding" "admin-binding" {

bucket = google_storage_bucket.default.name

role = "roles/storage.objectAdmin"

members = var.bucket_admins

}

resource "google_storage_bucket_iam_binding" "viewer-binding" {

bucket = google_storage_bucket.default.name

role = "roles/storage.objectViewer"

members = var.bucket_viewers

}

We will also create an examples folder and place the input.tfvars file there with all variable values:

project = "terraform-sandbox-302013"

region = "europe-west3"

bucket_name = "terraform-sandbox-302013-my-storage"

bucket_labels = {

my_key = "my_value",

my_key_2 = "other_value"

}

bucket_admins = [

"serviceAccount:my-bucket-admin@terraform-sandbox-302013.iam.gserviceaccount.com",

]

bucket_viewers = [

"serviceAccount:my-bucket-viewer@terraform-sandbox-302013.iam.gserviceaccount.com",

]

The structure of our project looks as follows:

main_folder

|- examples

| |- input.tfvars

|- main.tf

|- providers.tf

|- variables.tf

We can now apply our code by typing

$ terraform apply -var-file=./examples/input.tfvars

What we just did gives us a simple way to change the result of our code without modifying the resource definitions: we just provide a different .tfvars file. We are now ready to introduce modules to our project.

Terraform modules are a way to structure your code better. If you have an object-oriented programming background, you can think of a module as a class: it is defined once and can be instantiated many times with different input values.

To use modules in our simple project we should now destroy all resources created so far (terraform destroy) and remove all hidden files from the project folder (such as the .terraform directory). We also change the project structure a little bit, moving the existing files into a modules/bucket_with_iam folder without changing their content:

main_folder

|- modules

| |- bucket_with_iam

| | |- examples

| | | |- input.tfvars

| | |- main.tf

| | |- providers.tf

| | |- variables.tf

And that’s it – we have just created a module named bucket_with_iam which can be used many times in different places of our project (even in other modules). We can now add the main.tf file to our project (in main_folder) and use the module:

module "first" {

source = "./modules/bucket_with_iam"

project = "terraform-sandbox-302013"

region = "europe-west3"

bucket_name = "terraform-sandbox-302013-my-storage"

bucket_labels = {

my_key = "my_value",

my_key_2 = "other_value"

}

bucket_admins = [

"serviceAccount:my-bucket-admin@terraform-sandbox-302013.iam.gserviceaccount.com",

]

bucket_viewers = [

"serviceAccount:my-bucket-viewer@terraform-sandbox-302013.iam.gserviceaccount.com",

]

}

As you can see, using modules is fairly simple: the module keyword followed by our local name for the module tells Terraform that we will be using a module, the source argument specifies where the module can be found (there are a few options: a local directory, a version control repository, a network location, or even a bucket), and the remaining arguments provide values for the module’s variables. Because we have added a module, we need to run terraform init again so that Terraform can install it.

Please note that the variables in the input.tfvars file are not in use right now! It is good practice to provide such files when creating modules, just to give users a general idea of what the variable values should look like. When you run terraform plan, you will see that the output of the command is essentially the same as before, except that the resource addresses are now prefixed with module.first. This code will create one storage bucket and two IAM bindings. If we need two buckets with different IAM bindings, we can simply reuse the existing module and provide different variables:

module "first" {

source = "./modules/bucket_with_iam"

project = "terraform-sandbox-302013"

region = "europe-west3"

bucket_name = "terraform-sandbox-302013-my-storage"

bucket_labels = {

my_key = "my_value",

my_key_2 = "other_value"

}

bucket_admins = [

"serviceAccount:my-bucket-admin@terraform-sandbox-302013.iam.gserviceaccount.com",

]

bucket_viewers = [

"serviceAccount:my-bucket-viewer@terraform-sandbox-302013.iam.gserviceaccount.com",

]

}

module "second" {

source = "./modules/bucket_with_iam"

project = "terraform-sandbox-302013"

region = "europe-west3"

bucket_name = "terraform-sandbox-302013-my-other-storage"

bucket_labels = {}

bucket_admins = [

"serviceAccount:my-bucket-admin@terraform-sandbox-302013.iam.gserviceaccount.com",

"serviceAccount:my-bucket-viewer@terraform-sandbox-302013.iam.gserviceaccount.com",

]

bucket_viewers = [

]

}

At this moment nothing stops us from parameterizing our root module (yes, our root folder is also a module technically speaking) to make it more reusable and use it to create even more complicated solutions.
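As a sketch of that idea, the root module could declare its own variables and pass them down, and the bucket_with_iam module could expose a value back to its caller. The outputs.tf file, the root variables.tf file and the output names bucket_url and first_bucket_url are assumptions and do not exist in the code above; the url attribute is the one visible in the plan output earlier in this article.

```hcl
# modules/bucket_with_iam/outputs.tf (hypothetical new file)
output "bucket_url" {
  description = "gs:// URL of the created bucket"
  value       = google_storage_bucket.default.url
}

# variables.tf in main_folder (hypothetical new file)
variable "project" {
  type        = string
  description = "GCP project"
}

variable "region" {
  type        = string
  description = "Region where resources will be provisioned"
}

# main.tf in main_folder
module "first" {
  source = "./modules/bucket_with_iam"

  project     = var.project
  region      = var.region
  bucket_name = "${var.project}-my-storage"

  bucket_admins = [
    "serviceAccount:my-bucket-admin@${var.project}.iam.gserviceaccount.com",
  ]
  bucket_viewers = [
    "serviceAccount:my-bucket-viewer@${var.project}.iam.gserviceaccount.com",
  ]
}

# Re-export the module output from the root module.
output "first_bucket_url" {
  value = module.first.bucket_url
}
```

Values for project and region can then be supplied with a .tfvars file, in the same way as with -var-file earlier.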

Summary

In this article, we have learned what Terraform modules are and how we can use them to provide complex solutions without code duplication.

Links:

https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/storage_bucket

https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/storage_bucket_iam

https://cloud.google.com/storage/docs/access-control/iam-roles
